{{DISPLAYTITLE:CKB - Cluster Knowledge Base}} | {{DISPLAYTITLE:CKB - Cluster Knowledge Base}} | ||

The '''CKB - Cluster Knowledge Base (also called Entity Knowledge Base)''' it’s the [https://wiki.share-vde.org/wiki/ShareDoc:PublicDocumentation/LODPlatform LOD Platform] database of linked data entities. It's a source of high quality data including the clusters of entities created in the reconciliation and conversion of bibliographic and authority data to the entity-relationship model in use in the LOD Platform framework. | The '''CKB - Cluster Knowledge Base (also called Entity Knowledge Base)''' it’s the [https://wiki.share-vde.org/wiki/ShareDoc:PublicDocumentation/LODPlatform LOD Platform] database of linked data entities. It's a source of high quality data including the clusters of entities created in the reconciliation and conversion of bibliographic and authority data to the entity-relationship model in use in the LOD Platform framework. | ||

| Line 19: | Line 17: | ||

* [https://wiki.share-vde.org/wiki/ShareDoc:PublicDocumentation/LODPlatform#The_LOD_Platform_workflow ShareDoc:PublicDocumentation/LODPlatform#The LOD Platform workflow] | * [https://wiki.share-vde.org/wiki/ShareDoc:PublicDocumentation/LODPlatform#The_LOD_Platform_workflow ShareDoc:PublicDocumentation/LODPlatform#The LOD Platform workflow] | ||

* [https://wiki.share-vde.org/wiki/ShareDoc:PublicDocumentation/LODPlatform/Workflow ShareDoc:PublicDocumentation/LODPlatform/Workflow] | * [https://wiki.share-vde.org/wiki/ShareDoc:PublicDocumentation/LODPlatform/Workflow ShareDoc:PublicDocumentation/LODPlatform/Workflow] | ||

=== Entity model and Cluster Knowledge Base === | |||

The LOD Platform currently supports the latest version of the BIBFRAME ontology, and will upgrade the MARC-to-BIBFRAME mapping to the most up to date version. The use of BIBFRAME within the Share Family initiative has been extended to guarantee interoperability with IFLA LRM and with applications not based on BIBFRAME. The [https://zenodo.org/doi/10.5281/zenodo.8332350 '''SVDE Ontology'''] is the result of work that has been carried on by the [https://wiki.share-vde.org/wiki/ShareFamily:NewsAndUpdates#Share_Family_Community_updates SEI - Sapientia Entity Identification Working Group], with the '''svde:Opus entity being the most prominent extension of the ontology'''.[[File:opus-work-model UpdateOpusTypes VersioneMia.drawio.png|none|thumb|536x536px]] | |||

Therefore, the SVDE Ontology supports the Share Family of initiatives (based in federated linked data discovery environments) and is developed as an extension to BIBFRAME. The output of the SEI Working Group that is shaping the SVDE ontology is being incorporated in the LOD Platform components, e.g. the OpusType property is available in the test environment and can be used in the JCricket entity editor. The outline of the SVDE ontology is described in this presentation article https://doi.org/10.5281/zenodo.8332350. | |||

[[File:Opus type.png|none|thumb|527x527px]] | |||

== Clusters, Prisms, Entities == | == Clusters, Prisms, Entities == | ||

| Line 36: | Line 40: | ||

[[File:a cluster = an entity = a prism.png|none|thumb|457x457px]] | [[File:a cluster = an entity = a prism.png|none|thumb|457x457px]] | ||

Each record coming from a provenance (= contirbuting institution) contributes in building/enriching one or more entities. An entity can be considered as a prism where each facet represents data coming from a given provenance. Each entity / cluster maintains a link to the records it originated from. | |||

[[File:From Library Data to Entities.png|none|thumb|451x451px]] | |||

[[File:Prism and facets.png|none|thumb|451x451px]] | |||

The final result of such articulated processes is a complex entity, rich in information from many different sources: the set of these clusters, these "prisms", feeds the CKB that each tenant of the Share Family uses as a cornerstone for the creation and the management of an ecosystem | The final result of such articulated processes is a complex entity, rich in information from many different sources: the set of these clusters, these "prisms", feeds the CKB that each tenant of the Share Family uses as a cornerstone for the creation and the management of an ecosystem entirely based on entities and no longer on the MARC records. | ||

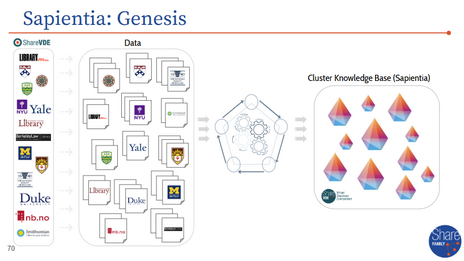

[[File:Sapientia Genesis.png|none|thumb|464x464px]] | [[File:Sapientia Genesis.png|none|thumb|464x464px]] | ||

== CKB pipelines and the evolution of the conversion process == | |||

== CKB pipelines and the evolution of the conversion | |||

Until now, the support of linked data conversion from multiple input formats has been possible at the high cost of having multiple conversion pipelines. | Until now, the support of linked data conversion from multiple input formats has been possible at the high cost of having multiple conversion pipelines. | ||

In line with the evolution of the LOD Platform system over time, it became clear that a single “source of truth” is needed to orchestrate a conversion workflow as frictionless as possible. So, the current conversion | In line with the evolution of the LOD Platform system over time, it became clear that a single “source of truth” is needed to orchestrate a conversion workflow as frictionless as possible. So, the current conversion process aims to streamline this process by extending input data capabilities and making the RDFizer component format-agnostic. | ||

To achieve this, several analysis and implementation actions must be undertaken to merge the two current conversion pipelines. | To achieve this, several analysis and implementation actions must be undertaken to merge the two current conversion pipelines. | ||

| Line 58: | Line 62: | ||

* enhancement of the RDFizer conversion component that will read the data only from the CKB where all the LOD Platform entities reside. | * enhancement of the RDFizer conversion component that will read the data only from the CKB where all the LOD Platform entities reside. | ||

As a result, the new conversion | As a result, the new conversion process will support: | ||

* a finer granularity level of the CKB in compliance with MARC and BIBFRAME granularity; | * a finer granularity level of the CKB in compliance with MARC and BIBFRAME granularity; | ||

* a “format-agnostic” CKB with extended input data capabilities to converge all input formats into one conversion source (eg. MARC21, UNIMARC, native BIBFRAME/RDF eg. from LD4P Sinopia application profiles etc.); | * a “format-agnostic” CKB with extended input data capabilities to converge all input formats into one conversion source (eg. MARC21, UNIMARC, native BIBFRAME/RDF eg. from LD4P Sinopia application profiles etc.); | ||

* one single conversion pipeline from the CKB – removing the conversion pipeline based on MARC. | * one single conversion pipeline from the CKB – removing the conversion pipeline based on MARC. | ||

[[File:Target conversion pipeline.png|none|thumb|466x466px]] | |||

== Granularization of the CKB == | == Granularization of the CKB == | ||

| Line 80: | Line 85: | ||

After completing the work on these vocabularies, they will be made available on JCricket. | After completing the work on these vocabularies, they will be made available on JCricket. | ||

The expected outcome of this | The expected outcome of this process is the advancement of the CKB technology to enable simpler data processing and effectiveness of conversion results, and to allow for more efficient performance of clustering procedures, ultimately increasing the added value of one of the key features of the LOD Platform system. | ||

__INDEX__ | __INDEX__ | ||

Latest revision as of 13:02, 20 January 2026

The CKB (Cluster Knowledge Base, also called Entity Knowledge Base) is the LOD Platform database of linked data entities. It is a source of high-quality data. This includes the clusters of entities created during the reconciliation and conversion of bibliographic and authority data to the entity-relationship model used in the LOD Platform framework.

It serves as a source of truth for managing high-quality bibliographic and authority descriptions. It offers a format-agnostic and highly interoperable solution that also integrates seamlessly with environments external to the LOD Platform, such as ILS/LSP and other linked data-based environments.

The data output of the CKB is available in RDF (Resource Description Framework), the data model for metadata designed by the World Wide Web Consortium (W3C).

The CKB in the LOD Platform workflow

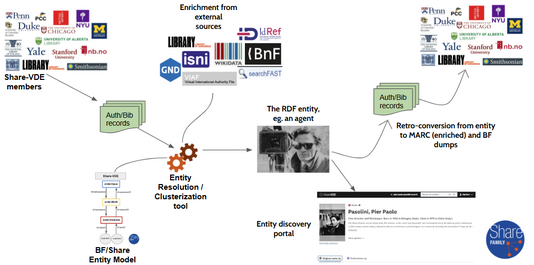

The Cluster Knowledge Base in the PostgreSQL database, and its corresponding RDF version, are the result of the data processing procedures and of the enrichment of each entity with external data sources. The CKB is populated with clusters of all the linked data entities created by LOD Platform processes. Typically these are clusters of Agents (authorized and variant forms of the names of Persons, Organisations, Conferences, Families) and clusters of Works (authorized access points and variant forms for the titles of the Works and Instances). They also include Subjects, Places, Genres and other entities. These clusters derive from the reconciliation and clustering of bibliographic and authority records, both records internal to the library system and records from external sources. This process forms groups of resources that are converted to linked data to represent a real-world object.
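To make the notion of a cluster concrete, here is a minimal Python sketch of one Agent cluster. It holds an authorized form, its variant forms and the identifiers of the records that fed it. The class, field names, URIs and record identifiers are hypothetical and chosen for illustration only. They do not reflect the actual PostgreSQL schema of the CKB.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical in-memory model of a single CKB cluster (illustration only)."""

    uri: str              # persistent URI assigned to the real-world entity
    entity_type: str      # e.g. "Agent", "Work", "Subject", "Place"
    authorized_form: str  # preferred access point for the entity
    variant_forms: set[str] = field(default_factory=set)
    source_records: set[str] = field(default_factory=set)  # ids of contributing records

    def add_record(self, record_id: str, name_form: str) -> None:
        """Attach a reconciled record; keep its form as a variant if it differs."""
        self.source_records.add(record_id)
        if name_form != self.authorized_form:
            self.variant_forms.add(name_form)

    def all_forms(self) -> set[str]:
        """Authorized form plus every variant collected so far."""
        return {self.authorized_form} | self.variant_forms


# Usage: two records from different (hypothetical) institutions reconciled into one Agent cluster.
agent = Cluster("https://example.org/agent/1", "Agent", "Twain, Mark, 1835-1910")
agent.add_record("lib-A:123", "Clemens, Samuel Langhorne, 1835-1910")
agent.add_record("lib-B:456", "Twain, Mark, 1835-1910")
```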

The CKB is the pool where new entities are collected as the clustering processes proceed. It is the authoritative source of the system. It is available both in the PostgreSQL relational database (mostly for internal maintenance purposes, reports, etc.) and in RDF, for use by the Entity Discovery Interface (the end-user portal) and for public exposure. The CKB is updated both through automated procedures and through manual actions via JCricket, the entity editor. Each change performed on the CKB, whether manual or automatic, is recorded in the Entity registry. The registry keeps track of every variation of the resource URI, which guarantees effective and broad sharing of resources.
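The role of the Entity registry can be sketched as an append-only log of changes that also remembers which URI replaced which. That way, a URI retired by a merge still resolves to the surviving entity. This is a minimal sketch under assumptions: the event fields, the action names and the redirect logic are hypothetical. They are not the LOD Platform implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class RegistryEvent:
    timestamp: datetime
    origin: str                       # "automatic" or "manual" (e.g. an edit in JCricket)
    action: str                       # hypothetical actions: "create", "update", "merge"
    uri: str                          # entity URI the change applies to
    target_uri: Optional[str] = None  # surviving URI when a merge retires `uri`


class EntityRegistry:
    """Hypothetical change log that keeps track of URI variations (illustration only)."""

    def __init__(self) -> None:
        self.events: list[RegistryEvent] = []
        self._replaced_by: dict[str, str] = {}

    def record(self, origin: str, action: str, uri: str,
               target_uri: Optional[str] = None) -> None:
        self.events.append(
            RegistryEvent(datetime.now(timezone.utc), origin, action, uri, target_uri))
        if action == "merge" and target_uri is not None:
            self._replaced_by[uri] = target_uri

    def resolve(self, uri: str) -> str:
        """Follow merge redirects so that a retired URI still reaches the current entity."""
        seen: set[str] = set()
        while uri in self._replaced_by and uri not in seen:
            seen.add(uri)  # guards against accidental redirect cycles
            uri = self._replaced_by[uri]
        return uri

    def history(self, uri: str) -> list[RegistryEvent]:
        """All events in which `uri` appears, either as subject or as merge target."""
        return [e for e in self.events if uri in (e.uri, e.target_uri)]


registry = EntityRegistry()
registry.record("automatic", "create", "https://example.org/work/1")
registry.record("automatic", "create", "https://example.org/work/2")
registry.record("manual", "merge", "https://example.org/work/2", "https://example.org/work/1")
```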

The role of the CKB in the LOD Platform workflow is outlined in the following sections:

- ShareDoc:PublicDocumentation/LODPlatform#The LOD Platform workflow

- ShareDoc:PublicDocumentation/LODPlatform/Workflow

Entity model and Cluster Knowledge Base

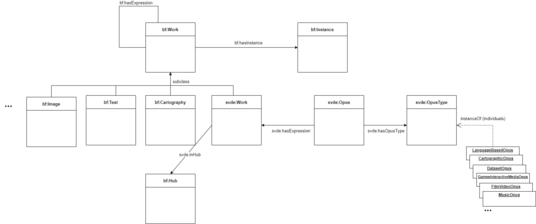

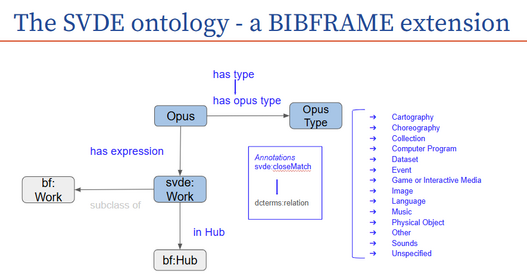

The LOD Platform currently supports the latest version of the BIBFRAME ontology and will upgrade the MARC-to-BIBFRAME mapping to the most up-to-date version. Within the Share Family initiative, the use of BIBFRAME has been extended to guarantee interoperability with IFLA LRM and with applications not based on BIBFRAME. The SVDE Ontology is the result of work carried out by the SEI - Sapientia Entity Identification Working Group. The svde:Opus entity is the most prominent extension of the ontology.

The SVDE Ontology thus supports the Share Family of initiatives (based on federated linked data discovery environments) and is developed as an extension to BIBFRAME. The output of the SEI Working Group that is shaping the SVDE ontology is being incorporated into the LOD Platform components. For example, the OpusType property is available in the test environment and can be used in the JCricket entity editor. An outline of the SVDE ontology is given in the presentation article https://doi.org/10.5281/zenodo.8332350.

Clusters, Prisms, Entities

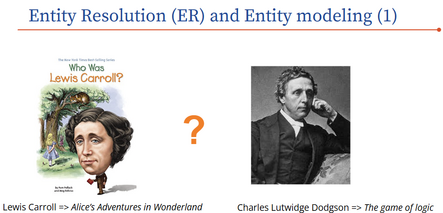

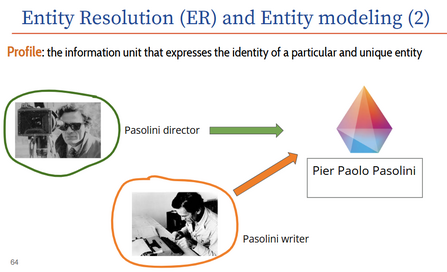

The CKB relies on the entity identification, resolution and clustering processes. Entities (works, agents, subjects, places, etc.) are identified and assigned persistent URIs. The starting points are input MARC bibliographic and authority records, plus external sources available for matching.

The same entity is usually represented in different ways, both within the same database (as in the case of pseudonyms, for example) and across different databases. The entity described in the various information systems is always much more complex than the way it is represented. The LOD Platform applies entity resolution algorithms to structured data such as library records. The set of information that expresses the identity of a particular real-world entity is a sort of profile for that entity. The more numerous these "profiles" are, the higher the chances of a correct entity identification.
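The idea that more profiles raise the chance of a correct identification can be illustrated with a toy matcher. A candidate profile joins a cluster when it is similar enough to any profile the cluster already holds. The scoring used here (Jaccard overlap of normalized name tokens plus a bonus for matching birth years) and the 0.6 threshold are assumptions for illustration. They are not the LOD Platform entity resolution algorithm.

```python
import re
import unicodedata


def name_tokens(name: str) -> frozenset[str]:
    """Lower-case, strip accents and punctuation, and return the set of name tokens."""
    decomposed = unicodedata.normalize("NFKD", name)
    plain = "".join(c for c in decomposed if not unicodedata.combining(c))
    return frozenset(re.findall(r"[a-z0-9]+", plain.lower()))


def similarity(a: dict, b: dict) -> float:
    """Jaccard overlap of name tokens, plus a bonus when birth years agree."""
    ta, tb = name_tokens(a["name"]), name_tokens(b["name"])
    union = ta | tb
    score = len(ta & tb) / len(union) if union else 0.0
    if a.get("birth") and a.get("birth") == b.get("birth"):
        score = min(1.0, score + 0.3)
    return score


def matches_cluster(candidate: dict, profiles: list[dict], threshold: float = 0.6) -> bool:
    """A candidate joins a cluster if it is close enough to ANY profile already in it."""
    best = max((similarity(candidate, p) for p in profiles), default=0.0)
    return best >= threshold


candidate = {"name": "Clemens, Samuel Langhorne", "birth": "1835"}
profiles = [{"name": "Twain, Mark", "birth": "1835"}]
print(matches_cluster(candidate, profiles))  # False: one profile, no shared name tokens
profiles.append({"name": "Clemens, Samuel L.", "birth": "1835"})  # from another source
print(matches_cluster(candidate, profiles))  # True: the extra profile enables the match
```

With only the "Twain, Mark" profile, the candidate scores 0.3 and is rejected. Once a second source contributes "Clemens, Samuel L.", the best score rises to 0.8 and the candidate is matched.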

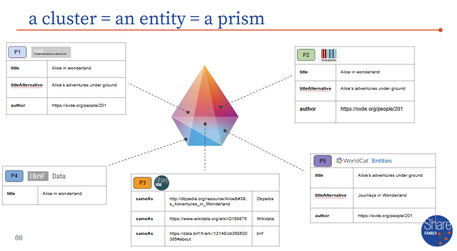

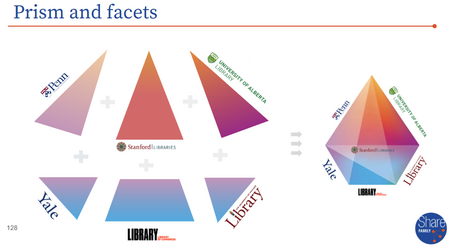

The entity, which we are representing as a prism, is the result of the set of profiles from the many sources used.

The entity resolution and clustering processes generate an entity database. The attributes and relationships that identify an entity are recorded for consumption (research, statistics, etc.). They are also used to iterate the "entification" processes, making them increasingly effective.

The relationship between the entity (and each triple) and the source record that contributed to its creation is always maintained through the Provenance. This preserves the link with the data that generated a prism over time. It also allows the entity to be managed both in automated update processes and in manual processes (via the JCricket entity editor). See also ShareDoc:PublicDocumentation/LODPlatform/EntityEditor#Provenance and Prism.
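The per-triple Provenance can be sketched as a store in which each triple keeps the set of provenances that asserted it. A facet of the prism is then the subset contributed by one provenance. Withdrawing a provenance (for instance when its record is deleted) removes only the triples that no other source supports. Class and method names, the provenance codes and the `ex:dates` predicate are hypothetical.

```python
Triple = tuple[str, str, str]  # (subject, predicate, object)


class ProvenancedEntity:
    """Hypothetical entity store in which every triple remembers its provenances."""

    def __init__(self, uri: str) -> None:
        self.uri = uri
        self._sources: dict[Triple, set[str]] = {}

    def assert_triple(self, predicate: str, obj: str, provenance: str) -> None:
        self._sources.setdefault((self.uri, predicate, obj), set()).add(provenance)

    def facet(self, provenance: str) -> set[Triple]:
        """One face of the prism: the triples contributed by a single provenance."""
        return {t for t, srcs in self._sources.items() if provenance in srcs}

    def withdraw(self, provenance: str) -> None:
        """Remove one provenance's contribution; triples still backed by another
        provenance are kept, orphaned triples are dropped."""
        for t in list(self._sources):
            self._sources[t].discard(provenance)
            if not self._sources[t]:
                del self._sources[t]

    def triples(self) -> set[Triple]:
        return set(self._sources)


agent = ProvenancedEntity("https://example.org/agent/1")
agent.assert_triple("rdfs:label", "Twain, Mark", "lib-A")
agent.assert_triple("rdfs:label", "Twain, Mark", "lib-B")
agent.assert_triple("ex:dates", "1835-1910", "lib-B")
facet_sizes = {p: len(agent.facet(p)) for p in ("lib-A", "lib-B")}
agent.withdraw("lib-B")  # the label survives because lib-A also asserted it
```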

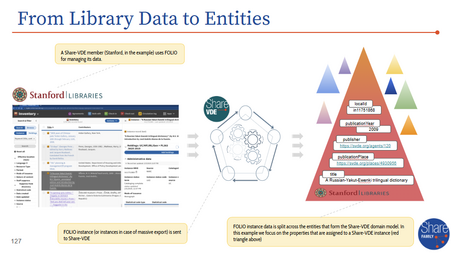

Each record coming from a provenance (= contributing institution) contributes to building or enriching one or more entities. An entity can be considered as a prism where each facet represents data coming from a given provenance. Each entity/cluster maintains a link to the records it originated from.

The final result of these articulated processes is a complex entity, rich in information from many different sources. The set of these clusters, these "prisms", feeds the CKB. Each tenant of the Share Family uses the CKB as a cornerstone for creating and managing an ecosystem based entirely on entities rather than on MARC records.

CKB pipelines and the evolution of the conversion process

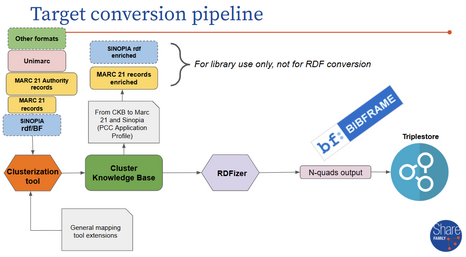

Until now, supporting linked data conversion from multiple input formats has come at the high cost of maintaining multiple conversion pipelines.

As the LOD Platform system evolved over time, it became clear that a single “source of truth” is needed to orchestrate a conversion workflow that is as frictionless as possible. The current conversion process therefore aims to streamline the workflow by extending input data capabilities and making the RDFizer component format-agnostic.

To achieve this, several analysis and implementation actions must be undertaken to merge the two current conversion pipelines.

The major tasks involved are summarized below. The granularization of the CKB is a crucial success factor:

- comparison of the existing conversion pipelines and sources of input data (i.e. MARC files enriched with URIs, and the data elements stored in the Cluster Knowledge Base);

- extension of the CKB data structure and mapping tool to accept new data elements in a format-agnostic fashion;

- enrichment of the CKB data structure with data elements reflecting the wealth of information and granularity of the MARC format (the so-called granularization);

- enhancement of the RDFizer conversion component so that it reads data only from the CKB, where all the LOD Platform entities reside.
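The format-agnostic mapping tool in the task list can be pictured as a set of declarative tables, one per input format, that map tags and subfields onto the same CKB data elements. The sketch below is a deliberately small, hypothetical example. The element names are invented, and only a few well-known fields are mapped (MARC 21 245 $a/$b, 100 $a, 020 $a; UNIMARC 200 $a/$e, 700 $a, 010 $a). Splitting the title into `title_proper` and `title_remainder` hints at what granularization means in practice. UNIMARC 700 $b (the rest of the name) is ignored here for brevity.

```python
# Hypothetical mapping tables: (tag, subfield code) -> CKB data element.
# Supporting a new input format means adding a table, not a new pipeline.
MAPPINGS: dict[str, dict[tuple[str, str], str]] = {
    "MARC21": {
        ("245", "a"): "title_proper",
        ("245", "b"): "title_remainder",
        ("100", "a"): "agent_name",
        ("020", "a"): "isbn",
    },
    "UNIMARC": {
        ("200", "a"): "title_proper",
        ("200", "e"): "title_remainder",
        ("700", "a"): "agent_name",
        ("010", "a"): "isbn",
    },
}


def to_ckb_elements(fields, source_format: str) -> dict[str, list[str]]:
    """Map (tag, subfield_code, value) tuples to format-agnostic CKB elements.

    Unmapped subfields are skipped; trailing ISBD punctuation is trimmed.
    """
    mapping = MAPPINGS[source_format]
    elements: dict[str, list[str]] = {}
    for tag, code, value in fields:
        element = mapping.get((tag, code))
        if element is not None:
            elements.setdefault(element, []).append(value.rstrip(" /:;,=").strip())
    return elements


marc21 = [("245", "a", "The adventures of Tom Sawyer /"), ("100", "a", "Twain, Mark,")]
unimarc = [("200", "a", "The adventures of Tom Sawyer"), ("700", "a", "Twain")]
```

Both records end up with the same `title_proper` value, which is the point of converging formats before conversion.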

As a result, the new conversion process will support:

- a finer granularity level of the CKB in compliance with MARC and BIBFRAME granularity;

- a “format-agnostic” CKB with extended input data capabilities, so that all input formats converge into one conversion source (e.g. MARC21, UNIMARC, native BIBFRAME/RDF such as data from LD4P Sinopia application profiles, etc.);

- a single conversion pipeline from the CKB, removing the MARC-based conversion pipeline.
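Once every input format has converged in the CKB, the RDFizer only needs to read CKB entities. The following sketch assumes a hypothetical entity shape (`uri`, `type`, `labels`) and example.org URIs. It emits N-Triples using the real BIBFRAME, RDF and RDFS namespaces. The actual RDFizer mapping is far richer and is not reproduced here.

```python
BF = "http://id.loc.gov/ontologies/bibframe/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def escape_literal(text: str) -> str:
    """Escape a string for use as an N-Triples literal (backslash first)."""
    return (text.replace("\\", "\\\\").replace('"', '\\"')
                .replace("\n", "\\n").replace("\r", "\\r"))


def rdfize(entity: dict) -> list[str]:
    """Serialize one CKB entity (hypothetical shape: uri, type, labels) as N-Triples."""
    subject = f"<{entity['uri']}>"
    lines = [f"{subject} <{RDF_TYPE}> <{BF}{entity['type']}> ."]
    for label in entity["labels"]:
        lines.append(f'{subject} <{RDFS_LABEL}> "{escape_literal(label)}" .')
    return lines


def run_pipeline(ckb_entities) -> str:
    """Single conversion pipeline: input formats have already converged in the CKB,
    so the RDFizer reads only CKB entities and never parses MARC or UNIMARC."""
    return "".join(line + "\n" for e in ckb_entities for line in rdfize(e))


nt = run_pipeline([
    {"uri": "https://example.org/work/1", "type": "Work",
     "labels": ['The celebrated "Jumping Frog"']},
])
print(nt, end="")
```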

Granularization of the CKB

A key aspect of LOD Platform data management is the ability to manage data at a very fine level of granularity. The aim is to make the CKB the single source of truth for LOD Platform and Share Family installations. This will enable:

- a “format-agnostic” CKB where all input formats converge into one conversion source (e.g. MARC21, UNIMARC, native BIBFRAME/RDF such as data from LD4P Sinopia application profiles, etc.);

- improved conversion from MARC to BIBFRAME and vice versa;

- a single conversion pipeline from the CKB, removing the MARC-based conversion pipeline.

In this context, we are streamlining the management of attributes by using controlled vocabularies instead of literals. This is being achieved by:

- defining each controlled vocabulary in collaboration with the Sapientia Entity Identification Working Group;

- enriching each vocabulary with external authoritative sources (RDA, Library of Congress, FINTO…);

- clustering the controlled vocabularies and assigning a URI to each value.
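The three steps above can be sketched as a lookup table. Each concept in a controlled vocabulary gets one URI and clusters the literal forms found in records and external sources, so that a literal such as "ill." is replaced by a URI. The vocabulary, its example.org URIs and the normalization rule are hypothetical. They do not come from the actual vocabularies being defined with the SEI Working Group.

```python
import unicodedata
from typing import Optional

# Hypothetical controlled vocabulary: one URI per concept, clustering the literal
# forms found in records and in external sources.
VOCABULARY: dict[str, set[str]] = {
    "https://example.org/vocab/illustrative-content/illustrations":
        {"illustrations", "ill.", "illus.", "illustrationen"},
    "https://example.org/vocab/illustrative-content/maps":
        {"maps", "map", "cartes"},
}


def _key(literal: str) -> str:
    """Normalization used both when building and when querying the lookup table."""
    return unicodedata.normalize("NFC", literal).strip().casefold()


# Invert the clusters into a lookup table: normalized literal -> concept URI.
LOOKUP = {_key(form): uri for uri, forms in VOCABULARY.items() for form in forms}


def to_uri(literal: str) -> Optional[str]:
    """Return the concept URI for a literal, or None when no concept matches."""
    return LOOKUP.get(_key(literal))
```

Returning `None` for unknown literals, rather than guessing, leaves room in this sketch for those values to be reviewed and added to a cluster later.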

After completing the work on these vocabularies, they will be made available on JCricket.

The expected outcome of this process is the advancement of the CKB technology. This should enable simpler data processing, more effective conversion results and more efficient clustering procedures. Together, these will increase the added value of one of the key features of the LOD Platform system.