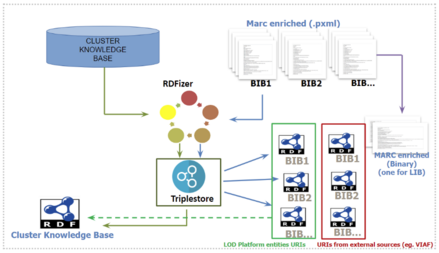

[[File:LODPlatform Graph 3 – The Cluster Knowledge Base RDF conversion and different deliverables.png|thumb|439x439px|none|Graph 3 – The Cluster Knowledge Base RDF conversion and different deliverables]] | [[File:LODPlatform Graph 3 – The Cluster Knowledge Base RDF conversion and different deliverables.png|thumb|439x439px|none|Graph 3 – The Cluster Knowledge Base RDF conversion and different deliverables]] | ||

=== Data updates === | === Data updates === | ||

| Line 162: | Line 172: | ||

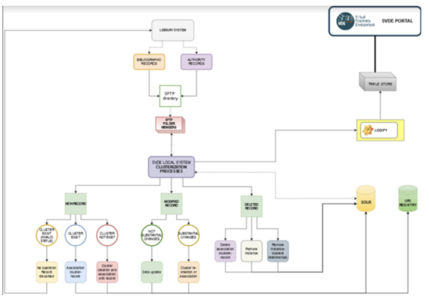

[[File:LODPlatform Data flow for the elaboration of the delta updates.png|none|thumb|429x429px|Data flow for the elaboration of the delta update records in the LOD Platform system (this diagram specifically refers to the Share-VDE tenant flow).]] | [[File:LODPlatform Data flow for the elaboration of the delta updates.png|none|thumb|429x429px|Data flow for the elaboration of the delta update records in the LOD Platform system (this diagram specifically refers to the Share-VDE tenant flow).]] | ||

Latest revision as of 07:09, 31 July 2024

This section explores the critical components forming the bedrock of the LOD Platform, the technology underpinning the Share Family system. In this section you can find details on the essential elements driving our ecosystem's functionality and innovation.

At its core, the LOD Platform relies on a sophisticated framework designed to facilitate seamless data management, collaboration, and knowledge dissemination. Within this framework, key components such as the Share-VDE entity model, JCricket entity editor, and the dynamics of data flow within the Share-VDE and Share Family systems are examined.

The Share-VDE entity model serves as the foundation for data representation and interaction, while JCricket streamlines editing operations and enhances the quality of machine created data across various domains. Understanding these components is crucial for optimizing performance and maintaining data integrity within the Share Family ecosystem.

Furthermore, you can find more information on the role of the Cluster Knowledge Base and Provenance and on how they contribute to knowledge aggregation, organization, and traceability.

Lastly, insights into the technological stack supporting the Share Family offer a comprehensive overview of the tools, frameworks, and technologies employed to construct and maintain this robust infrastructure.

For more details on the specific terminology used, refer to ShareDoc:Glossary.

Introduction

The LOD - Linked Open Data Platform is a highly innovative technological framework, an integrated ecosystem for the management of bibliographic, archive and museum catalogues, and their conversion to linked data according to the BIBFRAME ontology version 2.0 (https://www.loc.gov/bibframe/docs/bibframe2-model.html), extensible as needed for specific purposes.

The core of the LOD Platform was designed in the EU-funded project ALIADA, with the idea of creating a scalable and configurable framework able to adapt to ontologies from different domains, capable of automating the entire process of creating and publishing linked open data, regardless of the data source format.

The aim of this framework is to open the possibilities offered by linked data to libraries, archives and museums by providing greater interoperability, visibility and availability for all types of resources.

The application of the LOD Platform requires a careful analysis of the standards, formats and models used in the target institution. Its coverage, based on BIBFRAME 2.0 as the core ontology, can be enriched with a suite of additional ontologies, such as Schema.org, PROV-O, MADS, RDFS, LC vocabularies and RDA vocabularies. The platform is flexible and allows for the implementation of additional ontologies, vocabularies and modelling according to specific needs.

By incorporating standards, models and technologies recognized as key elements for the creation of new processes of management and use of knowledge, the LOD Platform allows:

- the creation of a data structure based on Agent, Work, Instance, Item, Place entities, as defined by BIBFRAME, and extensible to reconcile other entities;

- data enrichment through the connection with external data sources;

- reconciliation and clusterization of entities created from the original data;

- the conversion of data according to the standard model indicated by the W3C for the LOD, RDF - Resource Description Framework;

- delivery of converted and enriched data to the target institution for reuse in their systems;

- the publication of the dataset in linked data on RDF storage (triplestore);

- the creation of a discovery portal with a web user interface based on BIBFRAME or other ontologies defined in specific projects.

High level steps

In the implementation of a system that uses the LOD Platform, data from libraries, archives and museums are transformed into linked data through entity identification, reconciliation and enrichment processes.

Attributes are used to uniquely identify a person, work or other entity, with variant forms reconciled to form a cluster of data referring to the same entity. The data are subsequently reconciled and enriched with further external sources, to create a network of information and resources. The result is an open relationship database and Entities Knowledge Base in RDF.
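The grouping of variant forms into a cluster can be illustrated with a minimal Python sketch. It is not the LOD Platform's actual algorithm: the normalization rule (accent stripping, punctuation removal, token sorting) and the function names `normalize` and `cluster_variants` are assumptions made for illustration only.

```python
import unicodedata
from collections import defaultdict


def normalize(name: str) -> str:
    """Build a comparison key: no accents, no punctuation, lowercase, sorted tokens."""
    decomposed = unicodedata.normalize("NFKD", name)
    without_accents = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    cleaned = "".join(ch if ch.isalnum() else " " for ch in without_accents.lower())
    return " ".join(sorted(cleaned.split()))


def cluster_variants(names):
    """Group name forms that share the same normalized key (hypothetical rule)."""
    clusters = defaultdict(list)
    for name in names:
        clusters[normalize(name)].append(name)
    return dict(clusters)


clusters = cluster_variants(
    ["Eco, Umberto", "Umberto Eco", "ECO, Umberto.", "Calvino, Italo"]
)
```

In this sketch the three forms of "Umberto Eco" end up in one cluster and "Calvino, Italo" in another. A production system would rely on more attributes than the name alone (dates, titles, identifiers) to avoid merging distinct entities.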

The database uses the semantic web paradigms but allows the target institution to manage their data independently, and is able to provide:

- enrichment of data with URIs, both for the original library records and for the output linked data entities; examples of sources for URI enrichment are ISNI, VIAF, FAST, GeoNames, LC Classification, LCSH, LC NAF, Wikidata;

- conversion of data to RDF using the BIBFRAME vocabulary and other ontologies;

- creation of a virtual discovery platform with web user interface;

- creation of a database of relationships and clusters accessible in RDF through a triplestore;

- implementation of tools for direct interaction with the data, permitting the validation, update, long-term control and maintenance of the clusters and of the URIs identifying the entities (see below);

- batch/automated data updating procedures;

- batch/automated data dissemination to libraries;

- progressive implementation of additional workflows such as API for ILS, back-conversion for local acquisition and administration systems, reporting.

The goal is to ensure that a large amount of data, which often remains hidden or unexpressed in closed silos (“containers”), finally reveals its richness within existing collections.

Benefits

The LOD Platform, developed according to the principle of functionality, provides various environments and interfaces for the creation and enrichment of data and offers workflows capable of responding to the different needs of librarians / archivists / museum operators, professionals, scholars, researchers and participating students.

There are several advantages:

- integration of the processes of a collaborative environment with local systems and tools, including ILS / LSP;

- integration into the semantic web while maintaining ownership and control of the data, benefiting from the simplified administration of the environment and a large pool of data;

- integration of library/archive/museum data into the collaborative environment and pool of data;

- standards and infrastructures for "future-proof" data, i.e. ensuring that they are compatible with the structure of linked data and the semantic web;

- enrichment of data with further information and relationships not previously expressed in the established metadata formats in use (e.g. MARC), increasing the possibilities of discovery for all types of resources;

- creation of an environment that is useful for both end users and professionals (librarians, archivists, museum operators);

- wider and direct interaction with, and editing of, linked data entities by librarians through the entity editor (more details in the next section);

- advanced search interfaces to improve the user experience and provide broader search results to users;

- exposure of data that would otherwise have remained hidden in silos, allowing end users to access a large amount of information that can be both imported and exported by the library.

This approach fully harnesses the potential of linked data, connecting library information to the advantage of scholars, patrons and all library users in a dynamic research environment that unlocks new ways of accessing knowledge.

Added values

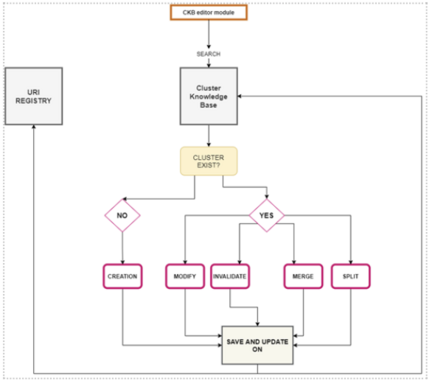

It’s particularly relevant to highlight that the LOD Platform is currently being enhanced with a module dedicated to editing and updating entities in the Entities Knowledge Base. This entity editor, named JCricket, is conceived as a collaborative environment with different levels of access and interaction with the data. It enables several manual and automatic actions on the clusters of entities saved in the database, including the creation, modification and merging of clusters of works, agents, etc.

JCricket consists of two main layers:

- automatic checks and updates of the data performed by the LOD system;

- manual checks and edits of the data performed by the user through a web interface.

All changes to entities, both automatic and manual, are reported on the Entity Registry, a source (also available in RDF) that tracks the updates of each entity, especially when this has an impact on the persistent entity URI.

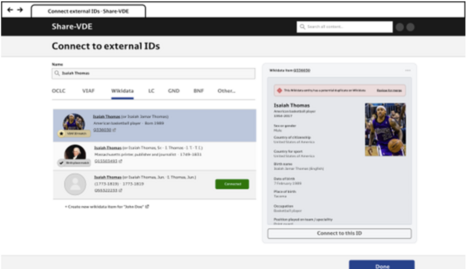
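The role of the Entity Registry in tracking changes that affect a persistent entity URI can be sketched as follows. This is a hedged illustration, not the actual registry design: the class `EntityRegistry`, its fields and the `https://example.org/...` URIs are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class EntityRegistry:
    """Toy change log: records merges and resolves old URIs to the current one."""

    events: list = field(default_factory=list)
    redirects: dict = field(default_factory=dict)

    def record_merge(self, merged_uri: str, surviving_uri: str, source: str) -> None:
        # 'source' distinguishes automatic updates from manual edits.
        self.events.append(
            {"action": "merge", "from": merged_uri, "to": surviving_uri, "source": source}
        )
        self.redirects[merged_uri] = surviving_uri

    def resolve(self, uri: str) -> str:
        """Follow the redirect chain until a URI with no further redirect is found."""
        seen = set()
        while uri in self.redirects and uri not in seen:
            seen.add(uri)
            uri = self.redirects[uri]
        return uri


registry = EntityRegistry()
registry.record_merge("https://example.org/agents/2", "https://example.org/agents/1", "manual")
registry.record_merge("https://example.org/agents/1", "https://example.org/agents/3", "automatic")
current = registry.resolve("https://example.org/agents/2")
```

Keeping the redirect chain means that a URI published before a merge can still be resolved to the entity that replaced it.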

A further added value is the ability of the LOD Platform to interact directly with external data sources such as ISNI and Wikidata. The interaction with Wikidata is currently under analysis and will be triggered from the entity editor itself, allowing the search from the editor into Wikidata and the enrichment of the LOD Platform entities with information from Wikidata and vice versa. This way the editor will allow for the creation of new identifiers both in the external data sources (where possible or applicable) and in the Entities Knowledge Base.

How the LOD Platform works

The developed components and tools aim to create a useful environment for knowledge management, with advanced search interfaces to improve the user experience and provide wider results to libraries, archives, museums and their users:

- Authify: a RESTful module that provides search and full-text services over external datasets (downloaded, stored and indexed in the system), mainly related to Authority files (VIAF, Library of Congress Name Authority files etc.), and that can also be extended to other types of datasets. It consists of two main parts: a Solr infrastructure for indexing the datasets and related search services, and a logical level that orchestrates these services to find a match within the clusters of the entities.

- Entities Knowledge Base: hosted on a PostgreSQL database, it is the result of the data processing and enrichment procedures with external data sources for each entity; typically, clusters of Agents (authorized and variant forms of the names of Persons, Corporate Bodies, Families) and clusters of titles (authorized access points and variant forms for the titles of the Works). The Entities Knowledge Base also contains other entities produced through identification and clustering processes (such as places, roles, languages, etc.).

- RDFizer: a RESTful module that automates the entire process of converting and publishing data in RDF according to the BIBFRAME 2.0 ontology in a linear and scalable way. It is flexible and can be adapted to multiple situations: it can therefore manage the classes and properties not only of BIBFRAME but also of other ontologies as needed.

- Triple store: the LOD Platform can currently be integrated with two different types of triple stores: an open source one (Blazegraph), more suitable for small or medium-sized projects (up to about 2,000,000 bibliographic records), and a commercial one (such as Neptune, which supports RDF and SPARQL), more suitable for larger datasets. The latter can be considered a valid alternative since it is integrated with the Amazon Web Services infrastructure already in use for the whole system; the whole LOD Platform has already migrated to Neptune, so this solution can provide better performance.

- Discovery portal: data presentation portal, for retrieving and browsing data in a user-friendly discovery interface.
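Since the triple store exposes the data through SPARQL, a client could search for Works by label with a query like the one built below. This is only a sketch: the function name `works_by_label_query` is hypothetical, and the assumption that Works carry an `rdfs:label` is illustrative and may not match the actual data model of a given tenant.

```python
def works_by_label_query(label: str, limit: int = 10) -> str:
    """Return a SPARQL 1.1 query string matching bf:Work resources by label."""
    # Escape backslashes and double quotes so the label is a valid SPARQL literal.
    safe = label.replace("\\", "\\\\").replace('"', '\\"')
    return f"""PREFIX bf: <http://id.loc.gov/ontologies/bibframe/>
PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>
SELECT ?work ?label WHERE {{
  ?work a bf:Work ;
        rdfs:label ?label .
  FILTER(CONTAINS(LCASE(STR(?label)), LCASE("{safe}")))
}}
LIMIT {int(limit)}"""


query = works_by_label_query('Divina "Commedia"', limit=5)
```

The resulting string can be sent to any SPARQL endpoint with a standard HTTP client; the endpoint address depends on the specific installation and is not assumed here.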

The data processing pipeline in a system using the LOD Platform

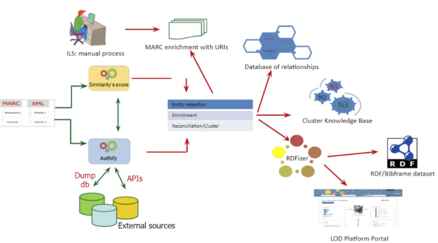

The diagrams in the next paragraph illustrate the high-level workflow for the data processing cycle in the LOD Platform, from the delivery of original records to the publication on the web portal. The workflow diagrams are for demonstration purposes, but they express the overall steps of the process.

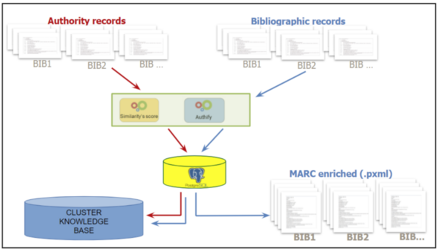

Starting from the left of graph 1, the data are imported from the target institution (libraries, archives, museums etc.) in different formats (e.g. MARC, Dublin Core, XML etc.). Both bibliographic and authority data can be imported.

The data received are processed through text analysis and string-matching processes (represented in the "Similarity's score" box), to identify the entities included in the 'flat' texts (records) and prepare the creation of clusters of entities.

This entity identification process is enhanced and extended through similar text analysis and string-matching processes launched on external sources (VIAF, ISNI, LC-NAF, GND, LCSH, Nuovo soggettario etc.) through the Authify framework. These processes enrich the data with other variant forms coming from external sources and with the URIs through which the same entity is identified on these sources (reconciliation). The enriched cluster makes it possible, during conversion to linked data, to activate interlinking, which is essential for sharing and reusing data on the web.

The result of these processes is threefold:

- Identification of entities;

- Data enrichment;

- Cluster/entity creation through reconciliation processes.
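The string-matching and reconciliation step described above can be sketched with Python's standard `difflib`. The scoring method, the `0.85` threshold, the function names and the `https://example.org/...` URIs are all assumptions for illustration; the source does not specify how the platform computes its similarity score.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity ratio between two strings, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.casefold(), b.casefold()).ratio()


def best_match(heading: str, candidates: dict, threshold: float = 0.85):
    """Return (uri, score) of the closest external entity, or (None, score) below threshold.

    'candidates' maps an external URI to the list of name forms known for it.
    """
    best_uri, best_score = None, 0.0
    for uri, forms in candidates.items():
        for form in forms:
            score = similarity(heading, form)
            if score > best_score:
                best_uri, best_score = uri, score
    if best_score >= threshold:
        return best_uri, best_score
    return None, best_score


external = {
    "https://example.org/authority/woolf": ["Woolf, Virginia", "Woolf, Virginia, 1882-1941"],
    "https://example.org/authority/wolfe": ["Wolfe, Thomas"],
}
matched_uri, matched_score = best_match("Woolf, Virginia, 1882-1941", external)
```

When a match is accepted, the external URI and the additional variant forms can be attached to the local cluster, which is what enables interlinking at conversion time.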

The data thus obtained are ready to be processed again, through different channels:

- manual enrichment and quality check (in the event that the library requests a specific service from external agencies - such as Casalini libri - or internally manages the enriched data received);

- extraction of “hidden” relations for the generation and feeding of a database of relations (which will be reused in possible subsequent steps to enrich the data and in the publication stages, to extend the links between data);

- creation of the Entities Knowledge Base, available in RDF (therefore as Linked Open Data) and accessible via an endpoint for SPARQL and API queries;

- processing and conversion to RDF, following the BIBFRAME model with extensions provided by the Share community (SVDE ontology, see below) and/or other ontologies and schemas proposed in the specific project.
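To make the conversion step more concrete, the sketch below serializes one Work and its Instances as N-Triples using BIBFRAME 2.0 classes and the `bf:instanceOf` property. It is a simplified illustration and not the RDFizer: the function names, the use of `rdfs:label` for the title and the `https://example.org/...` URIs are assumptions.

```python
BF = "http://id.loc.gov/ontologies/bibframe/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def escape_literal(text: str) -> str:
    """Escape backslashes, double quotes and newlines for an N-Triples literal."""
    return text.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def cluster_to_ntriples(work_uri: str, title: str, instance_uris: list) -> str:
    """Emit a bf:Work with a label, plus one bf:Instance per URI linked via bf:instanceOf."""
    lines = [
        f"<{work_uri}> <{RDF_TYPE}> <{BF}Work> .",
        f'<{work_uri}> <{RDFS_LABEL}> "{escape_literal(title)}" .',
    ]
    for instance_uri in instance_uris:
        lines.append(f"<{instance_uri}> <{RDF_TYPE}> <{BF}Instance> .")
        lines.append(f"<{instance_uri}> <{BF}instanceOf> <{work_uri}> .")
    return "\n".join(lines)


ntriples = cluster_to_ntriples(
    "https://example.org/works/1",
    'The "Name" of the Rose',
    ["https://example.org/instances/1"],
)
```

N-Triples is used here because it needs no external library; a real pipeline would more likely rely on an RDF toolkit and on the full set of ontologies agreed for the project.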

At the end of these processes, the data are ready to be indexed on the discovery portal and published on various sites in RDF. They can also be enhanced and extended through the entity editor JCricket.

Graphs 2 and 3 show in more detail some steps of the overall workflow shown in graph 1.

The LOD Platform workflow

Data updates

The LOD Platform is able to manage entities created with data from internal and external sources, using different approaches that depend on the data source (update/change management processes via SFTP file exchange, availability of OAI-PMH or other protocols, periodic update of the dump available for the web community etc.). The choice of approach depends on the use case. In addition, the JCricket editor will allow authorized persons to manage entity data manually, through a user-friendly interface, to enhance data quality.

Here follows an outline of the automated processes that have already been implemented for any Share Family project.

Delta updates

By “delta” update we mean the changes that occur to the library records that are periodically pushed to the LOD Platform, to be published on the discovery portal. The automation of the ingestion in the LOD Platform of updated library records has the purpose of regularly updating the data available through the discovery interface and the other endpoints of the workflow where the data are available. This means updating the data of the clustered entities and the related resources searchable on the discovery interface and in the triplestore, according to the frequency requested by the library.

Steps of the process for update/change management via SFTP file exchange:

- the library delivers bibliographic and authority records to the agreed SFTP directory, in the sub-directory dedicated to their institution. The system expects to receive from each library only the delta of their records, i.e. only those records that have been changed or added or deleted, compared to the previous dispatch;

- a running script processes the records in sequential order, by file name, and accepts as input .mrc files (for new and modified records) and .txt files (for deleted records). Additional input file formats are included in the workflow in case the library manages them in the regular/daily data handling;

- ingestion of library MARC records in the system: after original records are uploaded from the library to the SFTP server, a script running regularly connects the Share internal system to the individual SFTP folders of the library, checks whether a new file has been uploaded and downloads the MARC records into the system. The files submitted by the library are thus automatically transferred from the SFTP sub-directory of the institution that has uploaded them to the corresponding sub-directory of the Share internal repository;

- MARC records processing: the delta update MARC records are processed according to LOD Platform procedures. This process includes enriching MARC records by incorporating various URIs: the Share tenant entity identifier (e.g. URIs from the https://svde.org namespace) and URIs from external authoritative sources such as ISNI, VIAF, and LCNAF. Upon request, MARC records can also be enriched with URIs from other tenants of the Share Family. The data are saved in the library tenant Postgres database;

- upload to Solr: the processed records are uploaded to the Solr platform for indexing, before populating the tenant. Among the processes involved, data from library records are processed and indexed so that the autocomplete function in the search fields on the discovery portal displays the indexed data (e.g. author, title) as suggested results to the user performing a search for a resource;

- updated data online: after the indexing phase, the information processed is ready to go live on the discovery portal.
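The file-handling rule in the steps above (sequential order by file name, .mrc for new and modified records, .txt for deletions) can be sketched as a small planning function. The function name `plan_delta`, the action labels and the example file names are hypothetical, and unknown extensions are simply skipped here, whereas the real workflow may accept additional formats.

```python
from pathlib import PurePosixPath

# Mapping from file extension to the action it implies in this sketch.
ACTIONS = {".mrc": "upsert", ".txt": "delete"}


def plan_delta(filenames):
    """Return (filename, action) pairs in the order the files would be processed."""
    plan = []
    for name in sorted(filenames):  # sequential order, by file name
        action = ACTIONS.get(PurePosixPath(name).suffix.lower())
        if action is None:
            continue  # format not handled in this sketch
        plan.append((name, action))
    return plan


plan = plan_delta(
    ["20240102_upd.mrc", "20240101_del.txt", "20240101_upd.mrc", "notes.pdf"]
)
```

Because the order is purely lexical, a date-based naming scheme such as the one in the example keeps deliveries in chronological order; the actual naming convention is agreed with each library and is not assumed here.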

References to MARC records above also apply to the other input formats included in the clustering and indexing processes (MODS, METS, Dublin Core, etc.).

The delta update process triggers: the update of clustered entities on the library tenant portal; the update of the data available on the triplestore; and the delivery of enriched MARC records to libraries.