{{DISPLAYTITLE:LOD Platform workflow}}

== Workflow components ==

=== Overview ===

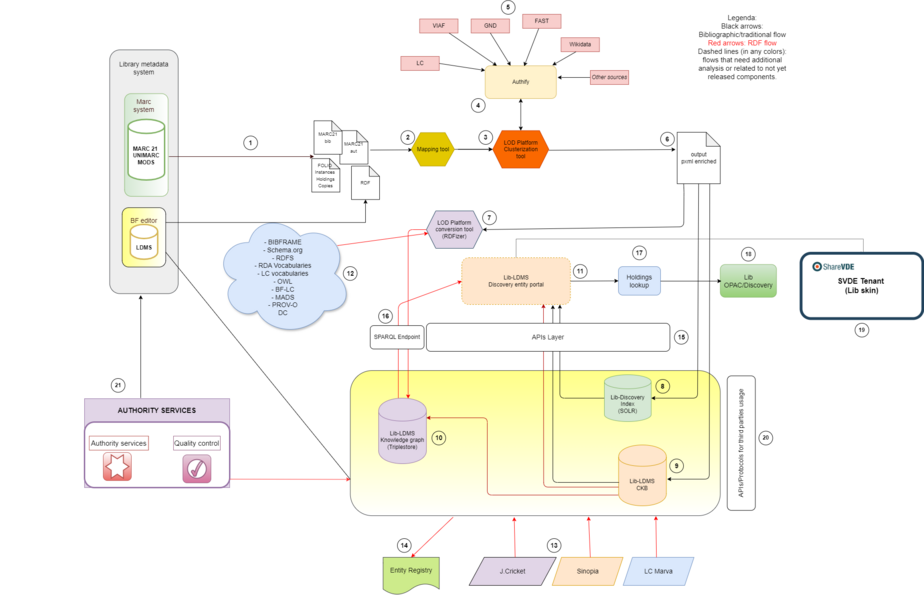

The LOD Platform workflow entails a number of complex actions, from the initial export from the source systems where the data are created (e.g. the original library catalogues) to publication in the discovery interface, intended as a linked data entity discovery portal (e.g. https://svde.org).

For ease of reading, the flow is described starting from the source data; the numbers of the paragraphs below identify the individual components of the process shown in the diagram below, and do not necessarily represent the linear sequence of actions performed by the system.

A '''[https://wiki.share-vde.org/w/images/5/54/share_components_EN.pdf general-purpose summary] of the LOD Platform components and output and a [https://wiki.share-vde.org/w/images/c/c7/Share_Family_data_flow.pdf presentation focussing on each step of the process]''' are also available.

[[File:General-Share-dataflow.png|none|thumb|924x924px|LOD Platform workflow]]

=== Original source data ===

'''1. Original source data''', produced in different formats by the institution's system, e.g.:

[...]

- Data in other formats, such as EAD, Dublin Core, MODS, METS, VRA Core.

=== Mapping tool ===

'''2. Mapping tool''': the tool that maps the source data in different formats and identifies the elements (content and format) useful for the subsequent clustering and conversion into LOD. The system is flexible enough to allow the set of source formats to be extended over time and the clustering and conversion processes to be adapted in an agile way. This mapping phase, supported by the analysis of domain experts when new formats are introduced, allows the clustering and conversion logic to be adjusted so as to accept a wide and rich range of formats.

=== Clusterization tool ===

'''3. Clusterization tool''': the tool contains the logic for clustering data that come from different, often non-homogeneous, sources in order to create each entity as a Real-World-Object (RWO) and assign it a unique identifier. By clustering we mean the identification of entities with Large Scale Fuzzy Name Matching techniques, through different text analysis methods such as:

[...]

* languages;

* other data coming from controlled vocabularies.

=== Clustering process ===

'''4. The clustering process''' is completed with the enrichment of data from external sources through the proprietary tool Authify, within the LOD Platform. Authify manages the data sources through different processes, depending on the query methods that the external sources make available (APIs/web services, dumps, protocols, etc.).

[...]

* As a last attempt, the system executes a search by “initials”, in order to find a valid match in those cases where the input string (or the indexed heading) contains the name in its short form. As with the previous point, this could lead to a less precise response.

In Authify, a complete hyperlinking process is created and the final result is a much richer and better-identified entity than the original one.
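The fallback search described above can be pictured as a cascade of progressively looser queries. The following is a minimal sketch only, not Authify's actual implementation: the strategy names, the normalisation rules and the in-memory <code>index</code> dictionary are all assumptions made for illustration.

```python
def normalise(text):
    """Lowercase and drop commas/full stops (hypothetical normalisation rule)."""
    return " ".join(text.lower().replace(",", " ").replace(".", " ").split())


def initials_form(text):
    """Keep the first token and reduce the remaining ones to their initials."""
    tokens = normalise(text).split()
    if not tokens:
        return ""
    return " ".join([tokens[0]] + [token[0] for token in tokens[1:]])


def search(query, index):
    """Try each strategy in turn; `index` maps a cluster URI to its heading.

    Returns (uri, strategy_name) for the first match, or None.
    Later strategies are looser, so their matches are less precise.
    """
    strategies = [
        ("exact", lambda s: s),
        ("normalised", normalise),
        ("initials", initials_form),  # last attempt
    ]
    for name, transform in strategies:
        wanted = transform(query)
        for uri, heading in index.items():
            if transform(heading) == wanted:
                return uri, name
    return None
```

Returning the name of the strategy that produced the match lets a caller treat a hit found by “initials” with more caution than an exact one.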

=== Authify ===

'''5. Authify''' - As mentioned before, Authify manages different external sources for the clusterization/enrichment processes. At the beginning of each new project, a checklist of the most relevant sources for that project is drawn up with the target library, in order to identify new available sources to be included in the process.

=== Reconciliation and enrichment process ===

'''6. The clusterization/reconciliation/enrichment processes''' output a pxml file, a proprietary file format defined to express the richness of the data in a standard way. This file feeds two distinct processes: the LOD conversion and the text indexing into SOLR.

=== RDFizer ===

'''7. RDFizer''' - The LOD Platform RDF conversion tool. RDFizer is a RESTful module that automates the entire process of converting and publishing data in RDF according to the BIBFRAME 2.0 ontology in a linear and scalable way. It is flexible and adaptable to multiple situations: it can therefore manage the classes and properties not only of BIBFRAME but also of other ontologies as needed. RDFizer works closely with other LOD Platform tools and components, such as Authify, the database of relationships and the Cluster Knowledge Base. The platform represents an enhanced and expanded version of the ALIADA framework (mentioned in the previous section), within an infrastructure that is better adapted to handling large amounts of data. The enriched pxml file mentioned in item 6 acts as the input for RDFizer, which translates it into triples and uploads them to the selected triplestore. Upon completion, the RDF data can be extracted as a Turtle file by using the APIs provided by the triplestore.

[...]

- Record Conversion: converts bibliographical data obtained from an enriched MARC file into triples.
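To make the idea of translating a record into triples concrete, here is a small sketch under stated assumptions. It is not RDFizer code: the input dictionary is a hypothetical stand-in for the proprietary pxml format, the example URI is invented, and the output is written as N-Triples lines (which are also valid Turtle). Only the BIBFRAME, RDF and RDFS namespace URIs are real.

```python
BF = "http://id.loc.gov/ontologies/bibframe/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def escape(literal):
    """Escape backslashes, double quotes and newlines for an N-Triples literal."""
    return (
        literal.replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
    )


def record_to_triples(record):
    """Turn a simplified record (hypothetical shape) into N-Triples lines.

    Expected keys: 'uri' (entity URI), 'type' (BIBFRAME class name),
    'labels' (list of strings).
    """
    subject = f"<{record['uri']}>"
    lines = [f"{subject} <{RDF_TYPE}> <{BF}{record['type']}> ."]
    for label in record["labels"]:
        lines.append(f'{subject} <{RDFS_LABEL}> "{escape(label)}" .')
    return lines
```

Joining the returned lines with newlines gives a file that a triplestore bulk loader could ingest; a production converter would rely on an RDF library and a full ontology mapping rather than string formatting.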

=== Discovery index (SOLR) ===

'''8. Discovery Index (SOLR)''' - The same pxml file is used for indexing in the inverted index of SOLR: this search engine, used in combination with the triplestore for the presentation of data in the search portal, greatly extends entity search and retrieval. Combined with what the triplestore makes available, it allows the end user to access the data with the entity, rather than the bibliographic or authority record, as the subject of the search. A complex and extended knowledge panel will be offered for each entity in the system, showing its attributes and the rich network of relationships with other entities, in a way that tries to combine richness of data with user-friendly and intuitive discovery. The many search and retrieval features offered by SOLR can be applied to extend the search capabilities of the system.

=== Cluster Knowledge Base (Entity Knowledge Base) ===

'''9. Cluster Knowledge Base (CKB), or Entity Knowledge Base'''. The Cluster Knowledge Base, held in a PostgreSQL database, and its corresponding RDF version are the result of processing the data and enriching each entity from external data sources; typically: clusters of Agents (authorized and variant forms of the names of Persons, Institutions, Families) and clusters of Works (authorized access points and variant forms for the titles of the Works and Instances). The CKB is populated with clusters of all the linked data entities that are created within the specific project that uses the LOD Platform. These clusters derive from the reconciliation and clustering of bibliographic and authority records (both records internal to the library system and records from external sources) into groups of resources that are converted to linked data to represent a real-world object.

The CKB is the pool where new entities are collected as the clustering processes go along. It is the authoritative source of the system and is available both in the PostgreSQL relational database (mostly for internal maintenance purposes, reports, etc.) and in RDF, for use by the Entity Discovery Interface (the end-users portal) and for public exposure. The CKB is updated both through automated procedures and through manual actions via JCricket, the entity editor. Each change made to the CKB (whether manual or automatic) is reported in the Entity registry, which has the key role of keeping track of every variation of the resource URI, in order to guarantee the effective and broad sharing of resources.

=== Triple store ===

'''10. Triple store'''. The data converted to RDF according to the agreed entity model (BIBFRAME 2.0 as the core ontology, enriched with additional ontologies) are indexed in the triple store. The data stored in the triple store can also change (according to the update cycles defined by the target library), through both manual and automatic procedures, via the entity editor.

=== Entity discovery portal ===

'''11. Entity discovery portal''' - The data are published to the Entity Discovery Portal. The Discovery Portal will harness the potential of linked data to offer an easy and intuitive user experience and deliver ever more wide-ranging and detailed search results to library patrons and library staff, based on the BIBFRAME data model with ad hoc extensions.

The design focus is to provide intuitive access to complex data and make BIBFRAME easy to understand and benefit from. To achieve this goal, a two-way process has been established within the international Share Family community, in which stakeholders and UX designers continuously provide feedback to each other in iterative phases. Moreover, input from real users (i.e. library patrons, mostly university students) has been gathered to shape the design around their needs, while also considering the requirements of librarians, who play a key role in the wider linked data community.

<span class="col-black">More detailed information is provided in</span> [[ShareDoc:PublicDocumentation/LODPlatform/DiscoveryInterface]].

=== Ontologies and controlled vocabularies ===

'''12. Ontologies and controlled vocabularies''' used in the project - Data modelling is one of the most delicate and crucial aspects of the whole data management process in a LOD project. The conversion tool, RDFizer, is built to be extensible, following an approach that is as open as possible to changes and extensions in existing ontologies and to the inclusion of new ontologies and controlled vocabularies. Currently, the conversion tool uses the following ontologies:

[...]

A distinctive feature of the LOD Platform is its ability to handle different ontologies and vocabularies. An example of this flexibility is the data model used in the Share Family environments, which combines the BIBFRAME-oriented approach with the IFLA LRM-oriented approach. This has been done to foster interoperability within the community of Share Family libraries, which follow different entity modelling practices, and with other projects that apply the pure BIBFRAME model.

The current evolution of this work is the SVDE Ontology, an extension to BIBFRAME modelled within the Share Family initiative. While the ontology supports the discovery functionality of the Share Family search systems, it may be re-used in any system requiring a bridge among BIBFRAME, IFLA LRM and RDA. The preliminary version of the SVDE Ontology has been published and can be consulted at https://doi.org/10.5281/zenodo.8332350.

=== JCricket EntityEditor ===

'''13. JCricket: the entities editor''' - All data produced by automatic conversion processes and stored in the Cluster Knowledge Base can be modified manually, to better address the issue of data quality. Within the Share Family initiative, a cluster/entity editor was designed in a joint effort with member institutions. <span class="col-black">For an overview of how it has been developed, refer to</span> [[ShareDoc:PublicDocumentation/LODPlatform/EntityEditor]]. As already mentioned, all changes to the clusters, including those made manually, are reported in the Entity registry.

=== Entity registry ===

'''14. Entity registry''' - As mentioned before, the management and tracking of changes to the URIs associated with each CKB cluster is entrusted to the Entity registry. As the name suggests, the Entity registry is a dedicated tool that records the association between clusters and the URIs that identify them, together with all the changes affecting this association. An interesting example is the Redirect, i.e. the registration of a redirect from a cluster that is no longer valid to a valid one: this guarantees that entities can be recovered and persistently identified even after heavy cluster modifications, such as the merge/matching process.

[...]

* Active: the cluster is active when it is fed by bibliographic records and, consequently, it is visible in the user interface of the Entity Discovery Interface portal.

* Inactive: the cluster is inactive when it is fed only by authority records or when it is not fed by any record. Inactive clusters, although they cannot be displayed in the user interface of the discovery portal, are involved in the clustering processes in the same way as active clusters and can change their status if they become associated with bibliographic records.

* Incorrect: the designation of a cluster as "incorrect" is entrusted to a special flag which, unlike the "active" and "inactive" statuses, can only be set manually via the CKB management module, known as the CKB editor. Setting the flag invalidates the cluster, making it unusable, and activates the redirect mechanism from the wrong cluster to a valid cluster by creating a uri_alias. Invalidation also disables any form of modification of the cluster, whether through the CKB editor or through automatic delta update processes.
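The status rules and the redirect mechanism above can be summarised in a short sketch. This is an illustration under assumptions, not the Entity registry's real data model: the class, its method names and the in-memory dictionary standing in for the uri_alias records are all hypothetical.

```python
def cluster_status(bibliographic_record_count):
    """A cluster is active only when at least one bibliographic record feeds it."""
    return "active" if bibliographic_record_count > 0 else "inactive"


class EntityRegistry:
    """Toy registry that records redirects from invalidated URIs to valid ones."""

    def __init__(self):
        self._aliases = {}  # invalidated URI -> URI it redirects to

    def invalidate(self, wrong_uri, valid_uri):
        """Flag a cluster as incorrect by registering an alias to a valid one."""
        self._aliases[wrong_uri] = valid_uri

    def resolve(self, uri):
        """Follow redirects until a URI with no alias is reached."""
        seen = set()
        while uri in self._aliases:
            if uri in seen:
                raise ValueError(f"redirect loop detected at {uri}")
            seen.add(uri)
            uri = self._aliases[uri]
        return uri
```

Following the chain in <code>resolve</code> means that a URI stays resolvable even if the cluster it was redirected to is itself later merged into another one.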

[...]

* manual: through the use of JCricket.

=== APIs layer ===

'''15. APIs Layer''' - The data produced in the conversion or creation workflow are accessible through an API layer that will offer two interfaces for querying the entities: a REST API layer and a GraphQL layer. In the REST API, several endpoints serve different request types. In the GraphQL layer, a single endpoint serves all queries, which are written in a declarative query language. GraphQL exposes the underlying dataset as a graph, which provides a powerful mechanism for introspecting the entities and the relationships that form the domain model. The same entities are also exposed using a RESTful paradigm, where a persistent URI is assigned to each entity and HTTP verbs are used for querying or manipulating the dataset. For more details on the APIs, see [[ShareDoc:PublicDocumentation/APIs]].
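The difference between the two interfaces can be shown by building, without sending, the two kinds of request. This is a sketch only: the <code>entity</code> field, its <code>uri</code> and <code>label</code> sub-fields, and the <code>/entities/</code> path are hypothetical and are not taken from the platform's actual schema, which is described in the API documentation linked above.

```python
import json


def graphql_request(entity_id):
    """Build the JSON body of a GraphQL query; every query goes to one endpoint."""
    query = """
    query Entity($id: ID!) {
      entity(id: $id) { uri label }
    }
    """
    return json.dumps({"query": query, "variables": {"id": entity_id}})


def rest_request(base_url, entity_id):
    """Build a REST request: the resource is named by the URL, the action by the verb."""
    return "GET", f"{base_url}/entities/{entity_id}"
```

In the GraphQL case the client chooses which fields come back by editing the query text, whereas in the REST case the shape of the response is fixed by the endpoint.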

=== SPARQL endpoint ===

'''16. SPARQL endpoint''' - The data are also exposed via a SPARQL endpoint that is available for queries. The current set-up includes two different methods for searching and viewing the data, i.e.:

[...]

To access the triple store and consult the data, contact [mailto:info@svde.org info@svde.org].
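Once access has been granted, a query can be sent with the standard SPARQL 1.1 Protocol, in which a GET request carries the query text in a <code>query</code> parameter. The sketch below only builds such a URL; the endpoint address is a placeholder, and the query assumes that Works are typed as <code>bf:Work</code>, which is consistent with the BIBFRAME model described here but has not been checked against the live data.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Example query: list up to ten resources typed as BIBFRAME Works.
QUERY = """PREFIX bf: <http://id.loc.gov/ontologies/bibframe/>
SELECT ?work WHERE { ?work a bf:Work } LIMIT 10"""


def sparql_get_url(endpoint, query):
    """Return the GET URL for a query, URL-encoding the query text."""
    return f"{endpoint}?{urlencode({'query': query})}"


def query_from_url(url):
    """Recover the query text from a URL built by sparql_get_url."""
    return parse_qs(urlsplit(url).query)["query"][0]
```

For long queries the same protocol also allows a POST request, which avoids URL length limits.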

=== Holdings Lookup ===

'''17. Holdings Lookup''' - The Entity Discovery Portal will also display holdings data, integrating local library data to retrieve holdings information.

=== Local OPAC discovery ===

'''18.''' The entity discovery portal will be connected with the local OPAC of individual institutions.

=== Institutional / skin portal configuration ===

'''19. Institutional/skin portal'''. The Entity Discovery Portal, where the linked data descriptions are published for end users, provides several configuration options that can vary across institutions or across tenants; see [[ShareDoc:PublicDocumentation/LODPlatform/DiscoveryInterface#Configuration options]].

=== APIs/Protocols for third parties usage ===

'''20. APIs/Protocols for third parties usage.''' To close the loop, the data processed in the LOD Platform workflow will be made available to libraries for their own use, through protocols including APIs, OAI-PMH, Atom feeds and Activity Streams.

=== Authority services ===

'''21. Authority services'''. Authority control services can be connected to the MARC and BIBFRAME data workflow.

== Technological stack ==

The LOD Platform is supported by a range of technologies, summarised in the presentation [https://wiki.share-vde.org/w/images/b/bc/SVDE_tech_stack_-_Back_end_%26_Front_end.pdf LOD Platform technological stack].

__FORCETOC__

Latest revision as of 09:49, 31 May 2024

== Workflow components ==

=== Overview ===

The LOD Platform workflow entails a number of complex actions, from the initial export from the source systems where the data are created (e.g. the original library catalogues) to publication in the discovery interface, intended as a linked data entity discovery portal (e.g. https://svde.org).

For ease of reading, the flow is described starting from the source data; the numbers of the paragraphs below identify the individual components of the process shown in the diagram below, and do not necessarily represent the linear sequence of actions performed by the system.

A general-purpose summary of the LOD Platform components and output and a presentation focussing on each step of the process are also available.

=== Original source data ===

'''1. Original source data''', produced in different formats by the institution's system, e.g.:

- MARC bibliographic data from the Library Management System;

- MARC authority data;

- Data in other formats, such as EAD, Dublin Core, MODS, METS, VRA core.

=== Mapping tool ===

'''2. Mapping tool''': the tool that maps the source data in different formats and identifies the elements (content and format) useful for the subsequent clustering and conversion into LOD. The system is flexible enough to allow the set of source formats to be extended over time and the clustering and conversion processes to be adapted in an agile way. This mapping phase, supported by the analysis of domain experts when new formats are introduced, allows the clustering and conversion logic to be adjusted so as to accept a wide and rich range of formats.

=== Clusterization tool ===

'''3. Clusterization tool''': the tool contains the logic for clustering data that come from different, often non-homogeneous, sources in order to create each entity as a Real-World-Object (RWO) and assign it a unique identifier. By clustering we mean the identification of entities with Large Scale Fuzzy Name Matching techniques, through different text analysis methods such as:

- Common key

- List

- Edit distance

- Statistical similarity

- Word embedding

These methods tackle issues about data identification, among them: similar names, split database fields, phonetic similarity, spelling differences, truncated components, titles and honorifics, initials and nicknames, etc.
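Two of the methods listed above, common key and edit distance, can be illustrated with a short sketch. It is a simplified example under assumptions and does not reproduce the platform's matching logic: the key-building rule, the function names and the distance threshold are all hypothetical.

```python
import unicodedata


def common_key(name):
    """Reduce a heading to an order-independent key (hypothetical rule).

    Diacritics are stripped, case and punctuation are ignored, and the
    tokens are sorted so that "Alighieri, Dante" and "Dante Alighieri" agree.
    """
    decomposed = unicodedata.normalize("NFKD", name)
    without_marks = "".join(c for c in decomposed if not unicodedata.combining(c))
    cleaned = "".join(c if c.isalnum() else " " for c in without_marks.lower())
    return " ".join(sorted(cleaned.split()))


def edit_distance(a, b):
    """Levenshtein distance, computed one row at a time."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(
                min(previous[j] + 1, current[j - 1] + 1, previous[j - 1] + cost)
            )
        previous = current
    return previous[-1]


def same_entity(a, b, max_distance=2):
    """Treat two headings as one entity if their keys are equal or nearly so."""
    key_a, key_b = common_key(a), common_key(b)
    return key_a == key_b or edit_distance(key_a, key_b) <= max_distance
```

The common key handles inverted names and diacritics cheaply, while the edit distance catches spelling differences such as transliteration variants; the threshold trades recall against the risk of merging distinct entities.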

Other logics support the creation of a cluster / entity as well as the definition of the preferred form, among the many possible variants, to be assigned during the data presentation phase, including:

- access point size/weight

- usage count

- identifiers presence, etc.
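The choice of a preferred form among the variants of a cluster can be sketched as a ranking over the criteria just listed. The order in which the criteria are applied here (identifier first, then usage count, then heading length as a rough proxy for access point size) is an assumption for illustration, as are the <code>Variant</code> fields; the source does not state how the platform weighs them.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    heading: str          # the variant form of the name
    usage_count: int      # how many records use this form
    has_identifier: bool  # whether an identifier accompanies this form


def preferred_form(variants):
    """Pick the variant that ranks highest on the assumed criteria."""
    return max(
        variants,
        key=lambda v: (v.has_identifier, v.usage_count, len(v.heading)),
    )
```

Because the key is a tuple, a later criterion is consulted only to break ties on the earlier ones.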

The process produces a cluster of data in which the many possible variant forms of a name (of whatever entity it is) are reconciled and collected in a cluster, which is assigned a unique identifier (proprietary URI). The entities already managed with clustering processes are the following:

- Agent (Person, Organization, Family)

- Work

- Instance

- Item (work-in-progress)

- Place

- Subject

- Topics: algorithms and processes are currently in place to enrich a topic with URIs from external sources (such as FAST or LCSH), mostly using string-matching logic. Intense work is under way to enrich terms from subject strings with Wikidata and other international sources; this increases the number of identifiers, which partially overcomes the issue of different alphabets and languages and reduces the risk of creating clusters that seem to identify the same entity but actually don’t (entity recognition process).

In addition, some “domain” entities are managed, such as:

- occupations;

- roles;

- languages;

- other data coming from controlled vocabularies.

Clustering process

4. The clustering process is completed with the enrichment of data from external sources through the proprietary tool Authify, within the LOD Platform. Authify manages the data sources through different processes depending on the query methods available from the external sources (APIs/WS, Dump, protocols etc.).

Authify is a RESTful module that offers several search and detection services. Initially, the LOD Platform project aimed to overcome some limitations of the public VIAF Web API in order to obtain more comprehensive and precise URI retrieval results. VIAF, being a public project, doesn’t allow massive invocation of its API: for use cases that require it, the project provides a download of the whole dataset.

That was the main reason why Authify was implemented: to index and store the VIAF clusters dataset and to provide, on top of it, powerful full-text and bibliographic search services. Other sources will be added progressively, to answer different libraries’ needs.

The Authify Cluster Search Services provide, as the name suggests, a full-text search service among names and works clusters. The search Web API uses, behind the scenes, an “invisible queries” approach in order to try and find a match, as precise as possible, within the managed clusters.

Thanks to the invisible queries approach, this complexity is hidden from the caller: for a single search request, the system executes a chain of different search strategies with different priorities, and the first strategy that produces a result populates the response that is returned. For debugging purposes, the response also includes the matching strategy that produced the results.

The system has been built in order to be flexible and extensible, so the chain mentioned above is fully configurable; for instance, here’s a brief description of the current configuration when searching names clusters:

- Subfields matching: the query language allows the caller to specify the source tag/subfields that compose the heading (which is the actual input query string). If those subfields are found within the query string, the system tries to find an exact match with their values;

- Input heading exact match: the system tries to find an exact match with the provided query string;

- FullText search: if no exact match is found, a regular full-text search is executed, with features such as proximity search for names (e.g. Bertrand Meyer = Meyer Bertrand) and special detection for some entities (e.g. birth and death dates). Unlike the previous strategies, precision here is lower and the strategy may return more than one result: in this case the response contains the total number of matched items and a ranking assigned to each cluster;

- Search by “initials”: as a last attempt, the system executes a search by initials, in order to find a valid match in those cases where the input string (or the indexed heading) contains the name in its short form. As with the previous strategy, this can lead to a response with lower precision.
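The chain described above can be sketched as follows. This is a toy illustration under stated assumptions, not Authify's implementation: the in-memory `INDEX`, the cluster ids, the strategy functions and the response fields are all invented for the example, and the subfield-matching strategy is omitted.

```python
from typing import Callable

# Toy in-memory index: heading -> cluster id (both invented for this example).
INDEX = {
    "Meyer, Bertrand": "cluster-1",
    "Eco, Umberto": "cluster-2",
}

Strategy = Callable[[str], list[str]]


def tokens(text: str) -> list[str]:
    """Lowercase tokens with commas and full stops treated as separators."""
    return text.replace(",", " ").replace(".", " ").lower().split()


def exact_match(query: str) -> list[str]:
    return [INDEX[query]] if query in INDEX else []


def full_text(query: str) -> list[str]:
    """Order-insensitive token match, so 'Bertrand Meyer' finds 'Meyer, Bertrand'."""
    wanted = set(tokens(query))
    return [cid for heading, cid in INDEX.items() if wanted == set(tokens(heading))]


def initials(query: str) -> list[str]:
    """Match on first letters of tokens, e.g. 'U. Eco' against 'Eco, Umberto'."""
    wanted = {t[0] for t in tokens(query)}
    return [cid for heading, cid in INDEX.items()
            if wanted == {t[0] for t in tokens(heading)}]


# The chain is plain data, so strategies can be reordered or replaced.
CHAIN: list[tuple[str, Strategy]] = [
    ("exact", exact_match),
    ("fulltext", full_text),
    ("initials", initials),
]


def search(query: str) -> dict:
    """Run strategies in priority order; the first non-empty result wins."""
    for name, strategy in CHAIN:
        hits = strategy(query)
        if hits:
            return {"strategy": name, "total": len(hits), "clusters": hits}
    return {"strategy": None, "total": 0, "clusters": []}
```

The caller issues a single `search(...)` call and never sees the fallbacks; the `strategy` field in the response plays the debugging role mentioned above.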

In Authify, a complete hyperlinking process is created and the final result is a much richer and well-identified entity than the original one.

Authify

5. Authify - As mentioned before, Authify manages different external sources for clusterization/enrichment processes. At the beginning of each new project, a checklist of the most relevant sources for that project is drawn up with the target library in order to identify new available sources to be included in the process.

Reconciliation and enrichment process

6. The clusterization/reconciliation/enrichment processes produce as output a pxml file, a proprietary file format defined to express the richness of the data in a standard way. This file feeds two distinct processes: the LOD conversion and the text indexing into SOLR.

RDFizer

7. RDFizer - The LOD Platform RDF conversion tool. RDFizer is a RESTful module that automates the entire process of converting and publishing data in RDF according to the BIBFRAME 2.0 ontology in a linear and scalable way. It is flexible and adaptable to multiple situations: it can therefore manage the classes and properties not only of BIBFRAME but also of other ontologies as needed. RDFizer works in close conjunction with other LOD Platform tools and components such as Authify, the database of relationships and the Cluster Knowledge Base. The platform represents an enhanced and expanded version of the ALIADA framework (mentioned in the previous section) within an infrastructure that is better adapted to handle large amounts of data. The enriched pxml file described in item 6 acts as the input for RDFizer, which translates it into triples and uploads them to the selected triplestore. Upon completion, the RDF data can be extracted as a Turtle file by using the APIs provided by the triplestore.

RDFizer manages two conversion procedures:

- Cluster Conversion: converts data obtained from the cluster enrichment process;

- Record Conversion: converts bibliographic data obtained from an enriched MARC file into triples.
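To make the idea of a cluster conversion concrete, the sketch below serialises a cluster as N-Triples lines using only the standard library. It is not RDFizer code: the function names, the example URI and the choice of `rdfs:label` for name forms are assumptions, and the real tool reads pxml input and follows the project's agreed entity model.

```python
BF = "http://id.loc.gov/ontologies/bibframe/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def literal(text: str) -> str:
    """Escape a string as an N-Triples literal."""
    escaped = (text.replace("\\", "\\\\")
                   .replace('"', '\\"')
                   .replace("\n", "\\n")
                   .replace("\r", "\\r"))
    return f'"{escaped}"'


def cluster_to_ntriples(uri: str, bf_class: str, labels: list[str]) -> list[str]:
    """Emit one rdf:type triple plus one rdfs:label triple per name form."""
    lines = [f"<{uri}> <{RDF_TYPE}> <{BF}{bf_class}> ."]
    lines += [f"<{uri}> <{RDFS_LABEL}> {literal(label)} ." for label in labels]
    return lines
```

For example, `cluster_to_ntriples("https://example.org/agents/1", "Person", ["Meyer, Bertrand"])` yields two lines that a triplestore bulk loader could ingest; the `example.org` URI is a placeholder.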

Discovery index (SOLR)

8. Discovery Index (SOLR) - The same pxml file is indexed in the SOLR inverted index: this search engine, used in combination with the triplestore for the presentation of data in the search portal, greatly extends entity search and retrieval. Combined with what the triplestore makes available, it lets the end user access the data with the entity, and no longer the bibliographic or authority record, as the object of the search. A complex and extended knowledge panel will be offered for each entity in the system, showing its attributes and its rich network of relationships with other entities, in a way that tries to combine richness of data with user-friendly and intuitive discovery. A long list of search and retrieval logics offered by SOLR can be applied to extend the search capabilities of the system.

Cluster Knowledge Base (Entity Knowledge Base)

9. Cluster Knowledge Base (CKB), or Entity Knowledge Base. The Cluster Knowledge Base, stored in a PostgreSQL database, and the corresponding RDF version are the result of the data processing and of the enrichment with external data sources for each entity; typically: clusters of Agents (authorized and variant forms of the names of Persons, Institutions, Families) and clusters of Works (authorized access points and variant forms for the titles of the Works and Instances). The CKB is populated with clusters of all the linked data entities that are created within the specific project that uses the LOD Platform. Such clusters derive from the reconciliation and clustering of the bibliographic and authority records (both records internal to the library system and records from external sources) to form groups of resources that are converted to linked data to represent a real-world object.

The CKB is the pool where new entities are collected as the clustering processes go along. The CKB is the authoritative source of the system and is available both in the relational database PostgreSQL (mostly for internal maintenance purposes, reports etc.) and in RDF, in order to be used for the Entity Discovery Interface (the end-users portal) and public exposure. The CKB is updated both through automated procedures and through manual actions via JCricket, the entity editor. Each change performed on the CKB (both manual and automatic) is reported in the Entity registry, which has the key role of keeping track of every variation of the resource URI, in order to guarantee the effective and broad sharing of resources.

Triple store

10. Triple store. The data converted to RDF according to the agreed entity model (BIBFRAME 2.0 as core ontology, enriched with additional ontologies) are indexed in the triple store. The data stored in the triple store can also change (according to the update cycles defined by the target library), through both automatic procedures and manual actions via the entity editor.

Entity discovery portal

11. Entity discovery portal - The data are published to the Entity Discovery Portal. The Discovery Portal will harness the potential of linked data to offer an easy and intuitive user experience and deliver ever more wide-ranging and detailed search results to library patrons and library staff, based on the BIBFRAME data model with ad hoc extensions.

The design focus is to provide intuitive access to complex data and make BIBFRAME easy to understand and benefit from. In order to achieve this goal, a two-way process has been established within the international Share Family community, where stakeholders and UX designers continuously provide feedback to each other in iterative phases. Moreover, input from real users has been gathered to shape design around users' needs (i.e. library patrons, mostly university students), at the same time considering the requirements from librarians that play a key role in the wider linked data community.

More detailed information is provided in ShareDoc:PublicDocumentation/LODPlatform/DiscoveryInterface.

Ontologies and controlled vocabularies

12. Ontologies and controlled vocabularies used in the project - Data modeling is one of the most delicate and crucial aspects of the whole data management process in a LOD project. The conversion tool, RDFizer, is built so that it can be extended, following an approach that is as open as possible to changes and extensions in existing ontologies and to the inclusion of new ontologies and controlled vocabularies. Currently, the conversion tool uses the following ontologies:

- BIBFRAME, version 2.0

- BF-LC

- RDFS

- OWL

- MADS

- PROV-O

- RDA Vocabularies

- LC Vocabularies

A distinctive feature of the LOD Platform is its ability to handle different ontologies and vocabularies. An example of such flexibility is the data model used in the Share Family environments, which combines the BIBFRAME-oriented approach with the IFLA LRM-oriented approach. This has been done in order to foster interoperability within the community of Share Family libraries, which comprises different entity modelling practices, and with other projects that apply the pure BIBFRAME model.

The current evolution of this work is the SVDE Ontology, an extension to BIBFRAME modelled within the Share Family initiative. While the ontology supports the discovery functionality of the Share Family search systems, it may be re-used in any system requiring a bridge among BIBFRAME, IFLA LRM and RDA. The preliminary version of the SVDE Ontology has been published and can be consulted at https://doi.org/10.5281/zenodo.8332350.

JCricket EntityEditor

13. JCricket: the entities editor - All data produced by automatic conversion processes and stored in the Cluster Knowledge Base can be modified manually, to better address the issue of data quality. Within the Share Family initiative, a cluster/entity editor was designed in a joint effort with member institutions. For an overview of how it has been developed, refer to ShareDoc:PublicDocumentation/LODPlatform/EntityEditor. As already mentioned, all changes in the clusters, including those made through manual actions, are reported in the Entity registry.

Entity registry

14. Entity registry - As mentioned before, the management and tracking of changes to the URIs associated with each CKB cluster is entrusted to the Entity registry; as suggested by the name itself, the Entity registry is a special tool in which the association between clusters and the URIs that identify such clusters is registered, and where all the changes affecting this association are reported. An interesting example is the Redirect, that is the registration of the redirect from a cluster no longer valid to a valid one: this guarantees the recovery of the entities and their persistent identification even in the presence of heavy cluster modifications, such as the merge/matching process.
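The redirect mechanism can be pictured with the short sketch below, which follows registered redirects until it reaches a cluster URI that is still valid. The dictionary-based registry and the `resolve` function are assumptions for illustration; the actual Entity registry storage and interface are not described here.

```python
def resolve(uri: str, redirects: dict[str, str]) -> str:
    """Follow redirects from an invalidated cluster URI to the currently valid one."""
    seen = {uri}
    while uri in redirects:
        uri = redirects[uri]
        if uri in seen:
            # Guard against a misconfigured registry that loops back on itself.
            raise ValueError(f"redirect loop detected at {uri}")
        seen.add(uri)
    return uri
```

Chained merges are handled naturally: if cluster A was merged into B and B later into C, a request for A resolves to C, so identifiers published earlier keep working.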

The status of the cluster is also reported in the Entity registry, and it can be:

- Active: the cluster is active when it is fed by bibliographic records and, consequently, it is visible in the user interface of the Entity Discovery Interface portal.

- Inactive: the cluster is inactive when it is fed only by authority records or when it is not fed by any record. Inactive clusters, although they cannot be displayed in the user interface of the discovery portal, are involved in the same way as active clusters in the clustering processes and can change their status in the case of association with bibliographic records.

- Incorrect: the designation of a cluster as "incorrect" is entrusted to a special flag which, unlike the "active" and "inactive" statuses, can only be set manually via the CKB management module, known as the CKB editor. Setting the flag invalidates the cluster and activates the redirect mechanism from the incorrect cluster to a valid cluster by creating an uri_alias. The invalidation makes the cluster unusable and disables any form of modification, whether through the CKB editor or through automatic delta update processes.

Both active and inactive clusters must be visible in JCricket, while the attribution of the “incorrect” status implies that the cluster is not displayed in the user interface.

The definition of the statuses allows an easy management of the clusters, with distinction between clusters that may or may not be displayed in the discovery portal, but most of all it guarantees the maintenance of the clusters that are no longer fed by any records, thus avoiding their cancellation and ensuring full correspondence between URIs and clusters.
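The status rules described in this section can be summarised in a few lines of code. The names below (`ClusterStatus`, `cluster_status` and the two visibility helpers) are hypothetical and only restate the rules given in the text.

```python
from enum import Enum


class ClusterStatus(Enum):
    ACTIVE = "active"
    INACTIVE = "inactive"
    INCORRECT = "incorrect"


def cluster_status(bibliographic_records: int, flagged_incorrect: bool = False) -> ClusterStatus:
    """The manual flag takes precedence; otherwise bibliographic records decide."""
    if flagged_incorrect:
        return ClusterStatus.INCORRECT
    if bibliographic_records > 0:
        return ClusterStatus.ACTIVE
    # Fed only by authority records, or by no record at all.
    return ClusterStatus.INACTIVE


def shown_in_discovery_portal(status: ClusterStatus) -> bool:
    return status is ClusterStatus.ACTIVE


def shown_in_editor(status: ClusterStatus) -> bool:
    return status is not ClusterStatus.INCORRECT
```

Note that an inactive cluster is never deleted in this model: it simply stops being displayed in the portal and becomes active again as soon as a bibliographic record is associated with it.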

There are two types of processes that can change clusters and their URIs:

- automatic: these are periodic processes, run according to each library's schedule, that are activated to manage the "delta" of the data;

- manual: through the use of JCricket.

APIs layer

15. APIs Layer - The data produced in the conversion or creation workflow are accessible through an API layer that will offer two interfaces to query the entities: a REST API layer and a GraphQL layer. In the REST API, several endpoints serve different request types; a persistent URI is assigned to each entity and HTTP verbs are used for querying and manipulating the dataset. In the GraphQL layer, a single endpoint serves all queries. GraphQL is a query language that exposes the underlying dataset as a graph, which provides a powerful mechanism for introspecting the entities and the relationships that form the domain model. For more details on the APIs, see ShareDoc:PublicDocumentation/APIs.

SPARQL endpoint

16. SPARQL endpoint - The data are also exposed via a SPARQL endpoint that is available for queries. The current set-up includes two different methods for searching and viewing the data, i.e.:

- graphical user interface SPARQL UI Console at https://data-staging.svde.org: it is the graphical end-user interface where the dataset loaded to the triple store can be selected; selecting "Query" in the user menu opens the query interface;

- direct access to SPARQL Endpoint at https://data-staging.svde.org/sparql: it's the HTTP endpoint to run queries directly on the dataset.

To access the triple store and consult the data, contact info@svde.org.
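As an illustration of direct access, the sketch below builds a SPARQL 1.1 Protocol GET request against the endpoint named above, using only the Python standard library. The query itself is an assumption: it presumes that works are typed as `bf:Work` in the dataset, which should be checked against the actual data model. The request is built but not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

ENDPOINT = "https://data-staging.svde.org/sparql"  # endpoint named in the text above

# Assumed query: list ten resources typed as bf:Work.
QUERY = """
PREFIX bf: <http://id.loc.gov/ontologies/bibframe/>
SELECT ?work WHERE { ?work a bf:Work } LIMIT 10
"""


def build_request(endpoint: str, query: str) -> Request:
    """Build a SPARQL Protocol GET request asking for JSON results."""
    url = f"{endpoint}?{urlencode({'query': query.strip()})}"
    return Request(url, headers={"Accept": "application/sparql-results+json"})


request = build_request(ENDPOINT, QUERY)
# Sending it with urllib.request.urlopen(request) needs network access and,
# as noted above, access to the triple store granted on request.
```

Passing the query as a URL-encoded `query` parameter and negotiating the result format through the `Accept` header follows the SPARQL 1.1 Protocol, so the same request shape should work with any compliant endpoint.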

Holdings Lookup

17. Holdings Lookup - The Entity Discovery Portal will also display holdings data, integrating local library data to retrieve holdings information.

Local OPAC discovery

18. The entity discovery portal will be connected with the local OPAC of individual institutions.

Institutional / skin portal configuration

19. Institutional/skin portal. The Entity Discovery Portal, where the linked data descriptions are published for end users, provides several configuration options that can vary across institutions or across tenants; see ShareDoc:PublicDocumentation/LODPlatform/DiscoveryInterface#Configuration options.

APIs/Protocols for third parties usage

20. APIs/Protocols for third parties usage. To close the loop, the data processed in the LOD Platform workflow will be made available to libraries for their own use, through protocols including APIs, OAI-PMH, Atom feeds and Activity stream.

Authority services

21. Authority services. Services for authority control can be connected to the MARC and BIBFRAME data workflow.

Technological stack

The LOD Platform is supported by a range of technologies summarised in the presentation "LOD Platform technological stack".